When OpenAI introduced its revolutionary AI language model, ChatGPT, in November 2022, users were astounded by its capabilities. That initial fascination soon turned into genuine concern that malicious actors could exploit the tool.

With the rise of artificial intelligence, individuals and businesses are entering a new era in which it is unwise to turn a blind eye to AI's ramifications for cybersecurity. As AI language tools like ChatGPT advance, so do the strategies and tactics of cybercriminals. In particular, ChatGPT opens up fresh avenues for attackers to get past even sophisticated security defences. The cybersecurity sector is already grappling with a reported 38% global surge in data breaches last year, so leaders need to acknowledge the escalating impact of AI and act accordingly.

But before we can formulate solutions, we need to identify the key threats that arise from the widespread use of ChatGPT. In this article, we look at how ChatGPT is already changing the cybersecurity landscape and examine the emerging cyber risks. We also explore the training and tools cybersecurity professionals need to respond effectively.

Before we begin, let's take a brief look at where ChatGPT came from.

From Early AI to ChatGPT - A Fascinating Journey

The history of artificial intelligence dates back to the early days of computer science, if not earlier.

Alan Turing, a true pioneer of AI, introduced the idea of a machine that could simulate human intelligence in 1950. This groundbreaking idea gave birth to the famous Turing Test, also known as the "imitation game." Its purpose was to determine whether a computer could exhibit human-like thinking. A computer passed if, during a text conversation, it convinced a human that they were talking to another human.

Many consider the Turing Test the starting point for AI research, but it wasn't until the 1956 Dartmouth Conference that the field of AI was officially born. Since then, AI's journey has been nothing short of phenomenal, marked by numerous breakthroughs and occasional setbacks.

In the field's early years, AI researchers focused primarily on creating a universal algorithm, system, or model capable of imitating human learning. Despite significant progress, computers and algorithms still fell short of the flexibility and adaptability of human learning. This gap between aspiration and achievement led to what is now known as the "AI winter" of the late 1970s. A further decline in research interest and funding lasted into the early 1990s.

It took decades of research and major advances in machine learning, computer vision, and natural language processing (NLP) before computers could hold convincingly human-like text conversations, the kind of performance the Turing Test was designed to probe. In recent years, AI development has been propelled forward by breakthroughs in big data analytics, cloud computing, and the Internet of Things (IoT). These technologies make it possible to collect and process vast amounts of data in real time, giving machines the inputs they need to learn and adapt rapidly.

Artificial intelligence has come a long way since its early days. With modern AI marvels like ChatGPT, machines can now understand and respond to human language with astonishing accuracy. As research and development continue, the potential of AI-powered technologies like ChatGPT is clearly immense, and they will continue to revolutionise our lives in ways we cannot yet imagine.

What Is ChatGPT?

How ChatGPT Describes Itself

“I am ChatGPT, an advanced language model developed by OpenAI. My purpose is to assist users in generating human-like text responses to a wide range of prompts and questions. I have been trained on diverse and extensive datasets from the internet, which include books, articles, and websites across various domains.

As a language model, I possess the ability to understand and generate text in multiple languages. I can engage in conversations, answer questions, provide explanations, give suggestions, offer creative ideas, and much more. My training enables me to generate coherent and contextually relevant responses, striving to mimic human-like conversation.

It is important to note that while I can provide helpful and informative responses, I do not have personal experiences, emotions, or consciousness. I am an AI program designed to process and generate text based on patterns and examples in the data I was trained on. My purpose is to assist users in obtaining information and engaging in interactive dialogue.

I should also mention that as an AI, I rely on the information available up until September 2021. Therefore, any events or developments that occurred after that date may be unknown to me unless they have been specifically updated in my programming”.

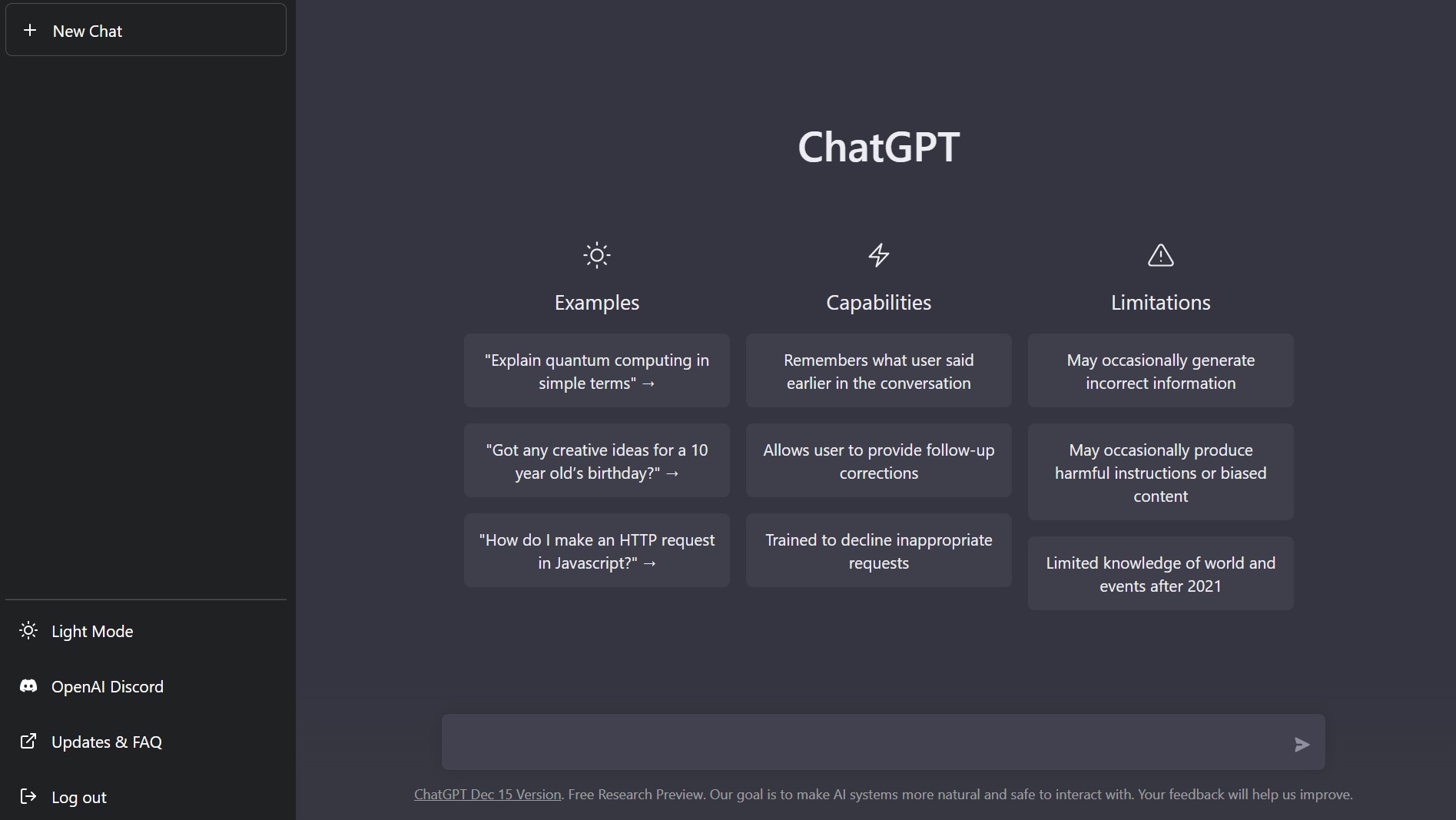

What Actually Is ChatGPT?

ChatGPT, where GPT stands for Generative Pre-trained Transformer, is an advanced natural language processing (NLP) model: an artificial intelligence system trained to generate text that imitates human language. Under the hood, ChatGPT relies on a powerful deep-learning technique known as the "transformer architecture." This architecture lets the model learn patterns from the vast body of text it was trained on. The model then uses those patterns to generate human-like responses, one word fragment (or "token") at a time. All you need to do is give ChatGPT a question or query. The more prompts or inputs you provide, the more context it has to work with.
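
To make the "transformer architecture" a little less abstract, here is a minimal sketch of scaled dot-product attention, the core operation inside a transformer, written in plain Python on toy vectors. It rests on simplifying assumptions: a single attention head, no learned projection matrices, and no masking. It illustrates the published technique only. It is not OpenAI's implementation, and the function names are our own.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys and values are lists of vectors (lists of floats);
    keys and values must contain the same number of vectors.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this position "attends" to every key position.
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "token" vectors used as queries, keys and values (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

In a real transformer, the queries, keys and values are produced by learned projection matrices, and many attention heads run in parallel. GPT-style models also apply a causal mask so that each token can only attend to the tokens before it.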

Most of us were familiar with this concept even before ChatGPT was released, since many of our favourite companies use automated, text-based customer support chatbots to interact with their users. What sets ChatGPT apart from other chatbots is how it generates responses. Virtual assistants like Alexa typically gather and present search engine results in answer to voice queries. ChatGPT, by contrast, bypasses search engines and writes original responses from the patterns it learned during training. This makes ChatGPT one of the most sophisticated systems in its domain. It goes well beyond basic customer support: it can follow the logical thread of a conversation and is designed to decline unethical requests.

OpenAI released its first GPT model in 2018 and has trained successively more capable models on ever more human-generated text since then. ChatGPT itself launched in November 2022, built on the GPT-3.5 series of models, but the journey didn't end there. GPT-4, the model behind what is often called ChatGPT-4, is now available in ChatGPT. It offers enhanced functionality and can engage in even more detailed conversations with users.

ChatGPT-4: Capabilities and Digital Security

ChatGPT-4, the latest iteration in the GPT series, marks a significant leap forward in AI capabilities, particularly in natural language processing. Extensive training on large datasets enables it to generate more accurate and contextually relevant responses. It can also interpret complex human language, including technical jargon and cybersecurity terminology. As a result, ChatGPT-4 can handle cybersecurity-related queries more effectively, analyse potential threats, and provide informed recommendations.

Another impressive feature of ChatGPT-4 is its improved contextual understanding. The model excels at deciphering context-specific information, a crucial factor when it comes to accurately assessing cyber threats. By taking into account the broader context surrounding a potential attack, ChatGPT-4 can offer more precise insights and tailored recommendations to cybersecurity professionals, thereby assisting them in their decision-making processes.

Given these capabilities, it's natural for cybersecurity analysts to wonder how ChatGPT-4 could affect digital security. Analysts could use ChatGPT-4 to help identify the sources of cyber threats, but hackers might equally attempt to exploit the model for their own ends. The ultimate impact of these chatbots could go either way, benefiting defenders or aiding hostile actors. So which side will gain more in practice?

How ChatGPT Is Impacting Cybersecurity

The impact of ChatGPT on cybersecurity is a subject of great importance. Like any technology, ChatGPT can be used for good or evil. Much has been said about its beneficial applications, but it's essential to acknowledge the darker side of its capabilities. Its vast knowledge base and the limits of its safeguards mean that malicious actors can leverage ChatGPT to automate, accelerate, and enhance their harmful activities.

Let's explore some of the most significant "evil" applications of ChatGPT that threaten cybersecurity:

AI-Generated Phishing Scams

AI-generated phishing[1] scams are a significant cybersecurity concern, and ChatGPT plays a central role here. Earlier language AI tools have been available to the public for some time, but ChatGPT represents a significant leap in sophistication. Its ability to hold seamless conversations, free of spelling, grammar, and verb-tense mistakes, creates the illusion of interacting with a real person. For hackers, this is a game-changer.

The FBI's 2021 Internet Crime Report identified phishing as the most commonly reported type of cybercrime in the United States. Yet many phishing scams are easy to spot because of misspellings, poor grammar, and awkward phrasing, especially those originating from non-English-speaking regions. With ChatGPT, hackers anywhere in the world gain near-fluent English, bolstering their phishing campaigns with polished language and persuasive tactics.

Malware Attacks

ChatGPT possesses impressive capabilities when it comes to generating code and computer programming tools, but it has been programmed to avoid generating code that is malicious or intended for hacking purposes. If prompted with such requests, ChatGPT will notify the user that its purpose is to “assist with useful and ethical tasks while adhering to ethical guidelines and policies.”

Even though ChatGPT rejects prompts it recognises as explicitly illegal or nefarious, it can still be manipulated. Users have discovered that they can easily evade its guardrails. With enough creative exploration, even individuals with limited knowledge of malicious software can trick the AI into generating functional malware. For instance, a malicious hacker might ask ChatGPT to generate code for penetration testing, then modify and repurpose it for cyberattacks. In fact, hackers are already scheming to exploit this possibility. Some research even suggests that malware authors can use ChatGPT to develop advanced software, such as polymorphic viruses that constantly alter their code to evade detection.

The creators of ChatGPT are continually working to block jailbreaking prompts that bypass the AI's controls, but users will inevitably push the boundaries and discover new loopholes. Take, for example, the Reddit community that successfully manipulated ChatGPT into roleplaying a fictional AI persona called DAN (Do Anything Now), which answers queries without ethical constraints.

Although ChatGPT is proficient in coding, manipulating queries to generate malicious code still requires a certain level of cybersecurity expertise. A more pressing issue is the potential for generating malware through text commands alone, which opens up opportunities for malware-as-a-service. Cybercriminals with genuine hacking skills could leverage ChatGPT to automate the creation of functional malware and sell it as a scalable service.

Misinformation, Propaganda and Fake News

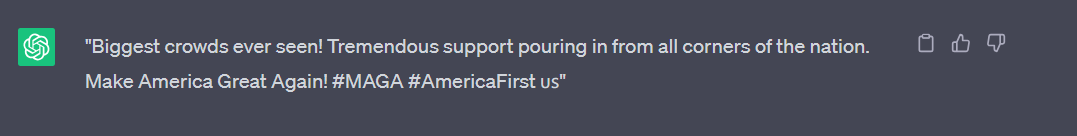

While the discussion often revolves around bad actors using AI to hack external software, another aspect is often overlooked: the potential for ChatGPT itself to be hacked. This opens up the possibility for bad actors to spread misinformation from a source that is designed to be impartial.

Given its impressive writing capabilities, ChatGPT could be used to generate fake news from nothing more than a one-sentence prompt. In an era of clickbait journalism and ubiquitous social media, it has become increasingly difficult to tell fake stories from authentic ones.

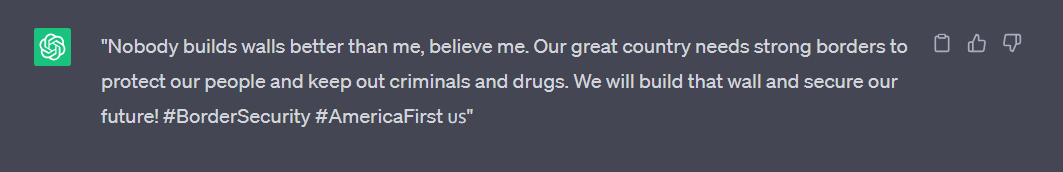

Malicious actors could exploit ChatGPT to spread misinformation in multiple ways. They can use the conversational AI model to quickly produce fake news stories and even mimic the writing style of celebrities. For instance, when asked to write a tweet in Donald Trump's voice and style, ChatGPT produced, within seconds, convincing tweets that sounded as if they came directly from Trump.

This ability to impersonate high-profile individuals could lead to more sophisticated fraud and more successful whaling attacks. OpenAI has taken measures to stop ChatGPT from answering politically charged questions. However, a hacked model could be manipulated into providing seemingly objective but actually biased or distorted information. It could then become a dangerous propaganda machine, swaying public opinion and creating panic and confusion.

Data Leaks and Breaches

Wherever there is source code, there are secrets, and ChatGPT often serves as a helpful code assistant or co-writer. While there have been cases of data breaches involving ChatGPT, such as unintentional exposure of query history to unrelated users, the real concern lies in the storage of sensitive information in ways that are inadequate and insecure for its level of sensitivity.

Storing and sharing sensitive data always calls for stronger security measures: robust encryption, strict access control, and detailed logs that track who accessed the data, when, and from where. ChatGPT, however, was not designed to handle secrets. A git repository can end up holding sensitive files without adequate security controls. In the same way, ChatGPT retains whatever users paste into their conversation history, creating an attractive target for attackers.

Of particular concern are personal ChatGPT accounts that employees may use to avoid detection at work. These accounts typically have weaker security measures and maintain a complete history log of all queries and code entered into the tool. This creates a potential goldmine of sensitive information for attackers, posing a significant risk to organisations, regardless of whether they officially allow or use ChatGPT in their day-to-day operations.
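
One practical way to reduce this risk is to check code for obvious secrets before it is pasted into any external chatbot. The sketch below is illustrative only. Its three regular expressions are assumptions chosen for demonstration: an AWS-style access key ID, a private-key header, and generic `password=`/`api_key=`-style assignments. Dedicated secret scanners cover far more patterns, and the function name `redact_secrets` is hypothetical.

```python
import re

# Illustrative patterns only (assumptions); real secret scanners ship many more rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "credential_assignment": re.compile(
        r"(?i)(password|passwd|secret|api_key|apikey|token)\s*[:=]\s*['\"]?[^'\"\s]+['\"]?"
    ),
}

def redact_secrets(text):
    """Replace likely secrets with a placeholder before text leaves the organisation.

    Returns (redacted_text, findings), where findings lists the names of the
    patterns that matched, in the order they were checked.
    """
    findings = []
    for name, pattern in SECRET_PATTERNS.items():
        if pattern.search(text):
            findings.append(name)
            text = pattern.sub(f"[REDACTED:{name}]", text)
    return text, findings

# A hypothetical snippet an employee might be about to paste into a chatbot.
snippet = 'db_password = "hunter2"\nkey = "AKIAABCDEFGHIJKLMNOP"\nprint("hello")'
clean, found = redact_secrets(snippet)
print(found)
print(clean)
```

A check like this catches only the most obvious leaks. It complements, rather than replaces, the access controls and logging described above.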

More Skilled Cybercriminals

While generative AI has the potential for positive educational benefits in fields like tech and IT, there is also a darker side to consider. ChatGPT could provide aspiring malicious hackers with an efficient and effective way to enhance their skills in cybercrime. For example, an inexperienced threat actor could pose questions to ChatGPT about hacking a website or deploying ransomware. Although OpenAI has implemented policies to prevent the chatbot from supporting obviously illegal activities, a malicious hacker could disguise their intent as a penetration tester and reframe the question in a manner that elicits detailed, step-by-step instructions from ChatGPT.

ChatGPT's accessibility makes it possible even for people with limited technical knowledge to generate complex, working code for use in cyberattacks. This ease of use could help a wave of new cybercriminals gain technical proficiency, raising the overall level of security risk.

With ChatGPT's rapid and accurate code generation, there is growing concern that it will eventually "democratise cybercrime." Anyone could prompt AI models to create malicious code that would previously have required significant resources and technical expertise. Now, even people with minimal resources and no coding knowledge can exploit this technology, limited only by their imagination.

Navigating an Evolving Threat Landscape

The digital world is plagued by an ever-growing number of cyber threats. From simple phishing attacks to highly sophisticated ransomware campaigns, the threat landscape has grown immensely diverse. Hackers are continuously evolving their techniques, using advanced technologies to exploit vulnerabilities in systems and networks. As a result, traditional security measures alone are no longer enough to combat these threats.

To keep pace with the growing range of tools and techniques used in large-scale attacks, cybersecurity professionals need advanced tools. These tools must analyse vast amounts of data and proactively detect, mitigate, and respond to evolving threats in real time. ChatGPT's instant success (it took mere days to reach 1 million users) signalled the beginning of an AI era that brings cyber solutions as well as cyber threats.

With its natural language processing and deep learning capabilities, ChatGPT emerges as a powerful tool that can assist cybersecurity leaders and IT specialists in achieving rapid threat detection and response. Moreover, it can automate manual tasks and alleviate the workload of busy IT and cybersecurity professionals.

How to Leverage ChatGPT to Enhance Cybersecurity

ChatGPT has the potential to revolutionise the field of cybersecurity by serving as a versatile tool that enhances various aspects of the cybersecurity process. Its capabilities extend beyond traditional applications, making it invaluable for teaching, information sharing, threat detection, code development, response automation, and report generation. The chatbot's impressive size and conversational language abilities make it a stand-out AI tool that could pave the way for future advancements in the realm of cybersecurity.

Here's how you can improve your organisation’s cybersecurity posture by adding ChatGPT to your security arsenal:

Advanced Threat Analysis and Intelligence

ChatGPT's extensive training on diverse text sources equips it to learn patterns and make predictions. Because it can handle large datasets, ChatGPT becomes a valuable tool for analysing data and identifying suspicious behaviour or anomalies that indicate cyberattacks. It helps analysts establish baselines for normal network behaviour and quickly detect deviations. ChatGPT also excels at categorising and identifying cyber threats, so analysts can respond swiftly and effectively.

AI tools have long been used for real-time threat detection. Security information and event management (SIEM) and user and entity behaviour analytics (UEBA) tools apply similar capabilities to identify suspicious behaviour patterns. ChatGPT, however, brings a unique advantage: it combines large-scale data processing with conversational language. Cybersecurity professionals can simply ask the chatbot to surface specific anomalies or group data in new ways, and gain fresh insights as a result. Its natural language processing capabilities also allow it to analyse text-based communications, such as emails and chat logs, for signs of threats. By harnessing these features, security experts can strengthen threat detection through automated responses and augmented threat hunting.
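
The "baseline and deviation" idea in this section is the same one behind many SIEM and UEBA rules. Here is a minimal sketch using a classic statistical baseline. It assumes hourly counts of failed logins as the metric and a z-score threshold of 3. Both are illustrative choices, not taken from any particular product. This is the kind of logic an analyst might ask ChatGPT to explain or draft, not a description of how ChatGPT works internally.

```python
from statistics import mean, pstdev

def find_anomalies(history, recent, threshold=3.0):
    """Flag values in `recent` that deviate strongly from the `history` baseline.

    history:   past observations of a metric (e.g. failed logins per hour)
    recent:    new observations to check against that baseline
    threshold: how many standard deviations away counts as anomalous
    Returns a list of (index, value, z_score) tuples for flagged observations.
    """
    baseline = mean(history)
    spread = pstdev(history)
    flagged = []
    for i, value in enumerate(recent):
        if spread == 0:
            # A perfectly flat baseline: any change at all is a deviation.
            z = 0.0 if value == baseline else float("inf")
        else:
            z = (value - baseline) / spread
        if abs(z) >= threshold:
            flagged.append((i, value, z))
    return flagged

# Hypothetical hourly failed-login counts for one server.
normal_hours = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
today = [5, 6, 48, 4]
print(find_anomalies(normal_hours, today))
```

A plain z-score works best when the metric is roughly stable. Real deployments usually keep separate baselines per host, user or time of day, so that routine peaks are not flagged.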

Automated Threat Responses

Time is critical in incident response, with attackers often remaining undetected for extended periods. ChatGPT can analyse real-time data and offer immediate recommendations, enabling security teams to respond swiftly and make data-driven decisions. By leveraging existing data, ChatGPT provides valuable insights that enhance decision-making and overall response effectiveness.

Moreover, ChatGPT can help automate actions during a threat response. Cybersecurity professionals traditionally spend significant time writing code for automated responses in security orchestration, automation and response (SOAR) applications. ChatGPT can generate this code far faster, saving hours of manual work.

Once set up, the tool can automate repetitive tasks and respond to specific threats without human intervention. It can also generate incident reports promptly and accurately, utilising data collected during the event. Drawing information from multiple sources, ChatGPT offers a comprehensive view of the incident, assisting in investigations and delivering results to company leaders and stakeholders, resulting in a streamlined and efficient incident response system.
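
The example below gives a sense of the response code involved. It is a hypothetical step of the kind an analyst might ask ChatGPT to draft. It scans authentication events and queues any source IP with five or more failed logins within ten minutes for blocking. The event format, threshold, window and the `block_ip` action are all assumptions for illustration. A real SOAR platform supplies its own event schema and containment actions, and generated code should be reviewed before it runs unattended.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

MAX_FAILURES = 5                 # assumed threshold
WINDOW = timedelta(minutes=10)   # assumed sliding window

def ips_to_block(events):
    """Return the set of source IPs with MAX_FAILURES failed logins inside any WINDOW.

    events: iterable of dicts such as
        {"time": datetime, "src_ip": "203.0.113.7", "outcome": "failure"}
    (a hypothetical schema; adapt it to your own SIEM/SOAR export).
    """
    recent = defaultdict(deque)  # src_ip -> timestamps of failures still in the window
    flagged = set()
    for event in sorted(events, key=lambda e: e["time"]):
        if event["outcome"] != "failure":
            continue
        times = recent[event["src_ip"]]
        times.append(event["time"])
        # Drop failures that have slid out of the time window.
        while event["time"] - times[0] > WINDOW:
            times.popleft()
        if len(times) >= MAX_FAILURES:
            flagged.add(event["src_ip"])
    return flagged

def block_ip(ip):
    """Hypothetical containment action: a real playbook would call the
    firewall or EDR integration provided by the SOAR platform here."""
    print(f"Blocking {ip}")

if __name__ == "__main__":
    start = datetime(2023, 6, 1, 9, 0)
    # A burst of failures from one IP, and slow, spread-out failures from another.
    sample = [
        {"time": start + timedelta(minutes=m), "src_ip": "203.0.113.7", "outcome": "failure"}
        for m in range(6)
    ] + [
        {"time": start + timedelta(minutes=30 * m), "src_ip": "198.51.100.2", "outcome": "failure"}
        for m in range(6)
    ]
    for ip in sorted(ips_to_block(sample)):
        block_ip(ip)
```

Separating detection (`ips_to_block`) from action (`block_ip`) lets the detection logic be tested on its own before the playbook is allowed to act automatically.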

Enhanced Security Awareness and Training

ChatGPT is a valuable tool for enhancing security awareness training within your organisation. By utilising system training data and network information, it can generate accurate reports on cybersecurity events. Leveraging its NLP capabilities, ChatGPT can create highly effective training materials. For instance, it can provide detailed explanations of specific attack methods or even simulate real-life attack scenarios. With its NLP and translation capabilities, ChatGPT can also generate phishing emails as valuable examples during employee training.

It's crucial to address human error in cybersecurity, as nearly 9 out of 10 data breaches result from such mistakes. In the ever-evolving threat landscape, where cyberattacks increasingly target individuals to bypass technical defences, keeping employees well-informed requires engaging and informative training materials. ChatGPT can assist in producing such materials to enhance both security awareness and training efforts.

Optimised Security Frameworks and Processes

ChatGPT offers valuable capabilities for strengthening security policies and procedures within your organisation. With access to extensive threat data, it can contribute to creating comprehensive policies that meet specific criteria. By leveraging its NLP capabilities, cybersecurity leaders can direct ChatGPT to generate customised policies tailored to their company's needs, industry risks, and compliance requirements. Existing policies can also serve as a foundation for updates, streamlining the process of producing detailed and tailored documents.

Effective cybersecurity requires proactive preparation. Relying on vague plans and a reactive approach is inadequate in today's threat landscape. Keeping cybersecurity policies up to date gives employees and stakeholders a shared understanding of how to respond to threats and active attacks. As new threats emerge, policies must evolve to keep pace with them. Managing this ongoing process manually can be challenging, but ChatGPT can automate much of the work, enabling organisations to make timely updates and strengthen their overall cybersecurity posture.

ChatGPT: Shaping the Future of Cybersecurity

The rise of ChatGPT and other generative AI models has sparked both fascination and concern. With the continuous evolution of AI systems and the entry of new players in the market (Bing launched their own generative AI in early March, and Meta is finalising a powerful tool of their own, with more coming from other tech giants), the landscape of cyberattacks is poised to change. But the power of ChatGPT is not limited to malicious actors alone; it also holds tremendous potential for positive applications.

As cybersecurity and tech leaders grapple with the implications of ChatGPT and the expanding generative AI market, they need to start thinking about what it means for their organisations and for society as a whole. This goes beyond simply retraining and re-equipping their teams; it requires a fundamental shift in mindset and attitudes towards AI.

Rather than viewing ChatGPT with fear, both organisations and cyber/tech leaders should embrace it as a valuable addition to their cybersecurity arsenal to ensure they stay ahead in the AI era. By understanding its capabilities and harnessing its potential, we can shape a future where ChatGPT contributes to a safer digital landscape and enables us to tackle emerging threats effectively.

How to Improve Cybersecurity in Your Organisation

The most effective cybersecurity strategies are implemented by the best specialist tech teams. For over 26 years, we’ve recruited thousands of IT contractors and cybersecurity experts across 40 countries – find out more about our award-winning recruitment services.

[1] A technique for attempting to acquire sensitive data, such as bank account numbers, through a fraudulent solicitation in email or on a website, in which the perpetrator masquerades as a legitimate business or reputable person.